Back in 2019 Apple took another radical step towards the future - they dropped 32-bit app support starting with macOS Catalina. They didn't do this out of nowhere, of course: developers were notified beforehand and had some time to update their apps for 64-bit. Most of the popular apps were ready for Catalina, but some weren't.

One of the apps I used to use a lot was MixMeister BPM Analyzer - a small macOS app most useful for DJs and people who organize their local music library. You could simply drop an .mp3 into the app, it would scan the song's tempo and write a BPM (beats per minute) value into the ID3 tag - this way you could scan a playlist or even your whole music library, and while preparing a DJ set or performing you could easily sort your playlist by BPM, making it easier to find the right song to mix. I don't DJ very often, but I like keeping my library well-organized, it makes it super easy to prepare or play a set.

With macOS Catalina the app stopped working. With each new song I added to my library I'd still look for an album cover, lyrics and other information to make my library nice and clean - I've been doing that ever since I got an iPod Nano 3. One of the tags Music (and iTunes before it) lets you edit manually is BPM - so for the songs I wanted to add to my DJ sets I would have to measure the BPM with some third-party tool (I used Algoriddim's DJ Pro) and add it by hand for each song. I waited for MixMeister to update their app or for someone else to come in and take its place, but it's been a while and there are still no native alternatives for this tiny app.

I decided to take matters into my own hands

I realized that the best tools for specific tasks like this are self-made - I know what I need and I have some understanding of how to make it work. I would make this app myself.

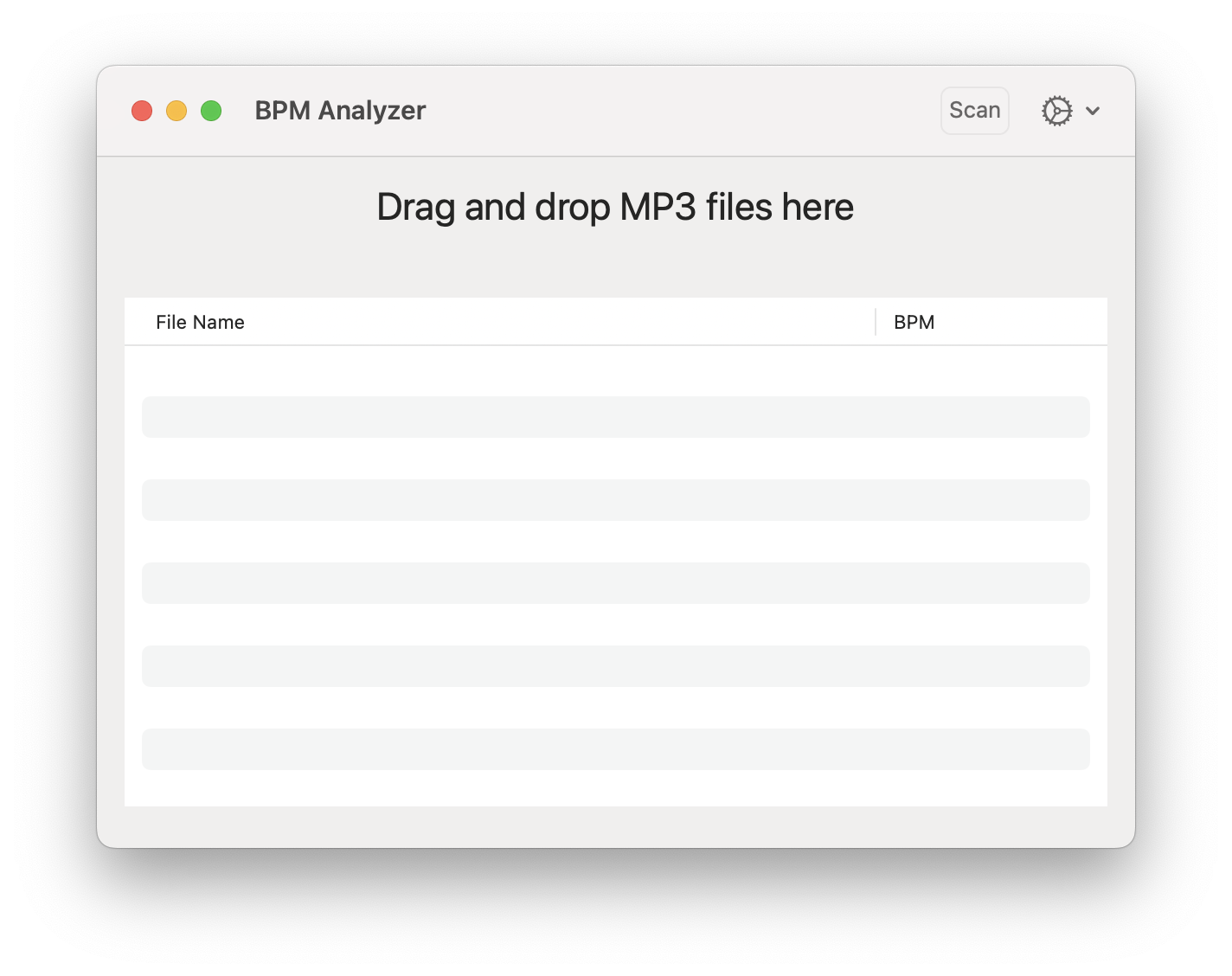

Making UI was the easiest part - luckily, SwiftUI is fairly easy to get back into after a while, and the app would have a very simple interface - it would be made of Table where you could drag and drop your .mp3 files, they would make a nice list. I was inspired by The Table would have 2 columns - one for the name and one for the BPM value. On top of the table there would be a "Scan" button and other configuration items - I would add them while developing the app and thinking of new features I'd like to have.

I didn't have to think about the UI too much - the old MixMeister's design was good enough, I just made it native-looking with default SwiftUI macOS UI. Xcode's SwiftUI Preview feature helps a lot too - a basic prototype took a couple of hours, the app looked somewhat ok.

It was still a prototype - pressing "Scan" would trigger this placeholder function:

```swift
/// Placeholder: returns a random value until the real analyzer exists.
func detectBPM(for file: String) -> Float? {
    // TODO: - Actual analyzer implementation
    return .random(in: 1...10)
}
```

All I had left was the most important part - actual tempo analysis.

Alright, it couldn't be that difficult - there're a lot of similar tools, I'll probably find a working library just for that

- Me, probably

Popular Python libraries wouldn't work because I wanted a native SwiftUI app but in theory I could go for C or C++. The first thing I found was this public repo with exactly what I was looking for - a local file analyzer. It used Superpowered - a powerful C++ audio library that had exactly what I needed - a BPM analyzer. Despite the fact that the last commit was 7 years ago it was easy to build and run the app. It's the best part of Objective-C that I truly miss - it's fast and reliable. Try doing that with a Swift project that's older than two days - you'll have to fix some code to build it.

The problem was that this app was iOS only, of course. A simple "Copy BMPAnalyzer.swift and Superpower directory to your project" did not work in my case - I was building for 64-bit macOS, and I needed a specific library build.

I don't know enough about how libraries and frameworks work, especially when it comes to C++ and Swift interoperability. This public repo with tons of examples from Superpowered did not help either. I would need to build the library myself, and I was unsuccessful with it.

A bit of AI

In my work and other tasks I try not to rely too much on help from AI assistants. But to be fair - they're getting pretty good. In 2024 we have access to ChatGPT 4o - the flagship model from OpenAI, but also Gemini from Google and Claude from Anthropic. Earlier this year I decided to use these tools more often, at first - to form a habit, because a lot of my friends sometimes don't even think of using them despite how popular, effective and - most importantly - free they are. At first I'd only use the free tools, but later I bought a ChatGPT subscription to try a more advanced model. I share access with my family, and just by looking at the chat history it's obvious that multiple people talk to the bot. After a couple of months I cancelled my paid subscription because the new model - 4o - became available for free, and even with the paid service I'd sometimes hit a limit - from my understanding the service had a lot of requests and wasn't able to keep up with everyone's. For this project, I tried using all 3 models for the same task and got the best result with the free Claude model (Claude 3.5 at the moment), so I stuck with that most of the time. Gemini wasn't much help: it kept losing the scope and couldn't work with files, which Claude and ChatGPT could.

My idea was to make my own BPM analyzer tool in native Swift, with AI's help and some basic research, so I could avoid importing third-party libraries as much as possible. I'd still use one - ID3TagEditor - a nice SPM package to deal with ID3 tags in the .mp3 files.

Another idea - to train an ML model just for this specific task - was quickly put aside for later. Even though I had a solid collection of about 3000 songs with correct BPM values, I didn't know much about ML training besides Apple's own CoreML - it's good for specific purposes, but not for mine. Hopefully I can come back to this idea in the future.

But AI tools aren't programmers - they are language models and the way they work is basically word juggling that seems consistent and full of reason at first sight. So my first algorithm prototypes weren't working at all - I mean, a 3000 BPM isn't exactly close to 128 BPM.

So I did another thing - looked for simple analyzers written in C, Python or any other languages to rewrite them in Swift. Homebrew helped me find bpm-tools - a tool by Mark Hills written in C (with the latest update in 2013, but that's not a problem when it comes to relatively simple algorithms and C) which was pretty easy to convert to Swift.

How the algorithm works

At this time, the algorithm consists of two different approaches combined together for a more accurate result:

Frequency-based approach

Using spectral analysis to detect the BPM and potentially give insight into the harmonic structure and pitch-related features.

- Divide the audio into overlapping frames

- Apply a Hanning window to each frame

- Compute the volume (energy) of each frame

- Calculate the differences between consecutive frame volumes

- Use Fourier analysis to find periodicities in the volume differences

- Identify potential BPM candidates based on correlation scores

- Find local maxima among the candidates to determine the most likely BPM values
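The steps above can be sketched roughly like this. It is a minimal illustration, not the app's actual code: the function name `spectralBPMCandidates`, the frame and hop sizes, the 60...180 BPM range and the choice to evaluate one Fourier term per whole-number BPM are all assumptions made for the example.

```swift
import Foundation

// Hypothetical sketch: names, frame sizes and the BPM range are assumptions.
func spectralBPMCandidates(samples: [Float],
                           sampleRate: Double,
                           frameSize: Int = 1024,
                           hopSize: Int = 512,
                           bpmRange: ClosedRange<Int> = 60...180) -> [(bpm: Int, score: Double)] {
    guard frameSize > 1, hopSize > 0, sampleRate > 0, samples.count >= frameSize else { return [] }

    // Hann window, applied to every frame before measuring its energy.
    let window = (0..<frameSize).map { i in
        0.5 - 0.5 * cos(2.0 * Double.pi * Double(i) / Double(frameSize - 1))
    }

    // Energy ("volume") of each overlapping frame.
    var energies: [Double] = []
    var start = 0
    while start + frameSize <= samples.count {
        var sum = 0.0
        for i in 0..<frameSize {
            let s = Double(samples[start + i]) * window[i]
            sum += s * s
        }
        energies.append(sum)
        start += hopSize
    }
    guard energies.count > 1 else { return [] }

    // Differences between consecutive frame energies.
    let diffs = (1..<energies.count).map { energies[$0] - energies[$0 - 1] }

    // Fourier analysis: magnitude of one DFT term per candidate tempo.
    let framesPerSecond = sampleRate / Double(hopSize)
    var scored: [(bpm: Int, score: Double)] = []
    for bpm in bpmRange {
        let omega = 2.0 * Double.pi * (Double(bpm) / 60.0) / framesPerSecond
        var re = 0.0
        var im = 0.0
        for (n, d) in diffs.enumerated() {
            re += d * cos(omega * Double(n))
            im -= d * sin(omega * Double(n))
        }
        scored.append((bpm: bpm, score: (re * re + im * im).squareRoot()))
    }

    // Keep local maxima only, strongest first.
    let peaks = scored.indices.filter { i in
        let left = i > 0 ? scored[i - 1].score : -Double.infinity
        let right = i < scored.count - 1 ? scored[i + 1].score : -Double.infinity
        return scored[i].score > left && scored[i].score > right
    }
    return peaks.map { scored[$0] }.sorted { $0.score > $1.score }
}
```

Calling it on decoded mono samples returns a ranked list whose first entry is the strongest tempo candidate. Decoding the .mp3 into samples is left out here, and a real implementation would likely use an FFT rather than this direct sum.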

Time-based approach

Using autocorrelation in the time domain to detect BPM, focusing more on rhythm and timing.

- Compute energy levels of the audio signal at regular intervals

- Use `autodifference()` to compare energy levels at different time offsets

- Scan through a range of possible BPM values using `scanForBpm()`

- Calculate scores for each potential BPM

- Select the BPM with the highest score as the primary BPM

- Choose diverse approximate BPM values as alternatives
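Here is a simplified sketch of the time-based steps. Only the names `autodifference` and `scanForBpm` come from the list above. The signatures, the linear interpolation for fractional lags, the 84...146 BPM range, and the `minSpacing` rule for picking diverse alternatives are assumptions for illustration, not the app's or bpm-tools' actual code. The score here is simply the negated difference, so the best-repeating tempo ranks highest.

```swift
import Foundation

// Hypothetical signatures: only the two function names come from the article.

/// Mean absolute difference between the energy envelope and a copy of itself
/// shifted by `lag` steps (fractional lags are linearly interpolated).
/// A value near zero means the envelope repeats at that lag.
func autodifference(_ energy: [Double], lag: Double) -> Double {
    guard lag.isFinite, lag >= 1, lag < Double(energy.count) else { return .infinity }
    let whole = Int(lag)
    let frac = lag - Double(whole)
    let count = energy.count - whole - 1
    guard count > 0 else { return .infinity }
    var total = 0.0
    for i in 0..<count {
        let shifted = energy[i + whole] * (1 - frac) + energy[i + whole + 1] * frac
        total += abs(energy[i] - shifted)
    }
    return total / Double(count)
}

/// Scans whole-number tempos and returns the best one plus up to three
/// alternatives that are at least `minSpacing` BPM apart from each other.
func scanForBpm(energy: [Double],
                stepsPerSecond: Double,
                bpmRange: ClosedRange<Int> = 84...146,
                minSpacing: Int = 4) -> (primary: Int, alternatives: [Int])? {
    var scored: [(bpm: Int, score: Double)] = []
    for bpm in bpmRange {
        // One beat at this tempo lasts `lag` energy steps.
        let lag = 60.0 * stepsPerSecond / Double(bpm)
        let difference = autodifference(energy, lag: lag)
        if difference.isFinite {
            scored.append((bpm: bpm, score: -difference))
        }
    }
    let ranked = scored.sorted { $0.score > $1.score }
    guard let best = ranked.first else { return nil }

    var alternatives: [Int] = []
    for candidate in ranked.dropFirst() where alternatives.count < 3 {
        let chosen = [best.bpm] + alternatives
        if chosen.allSatisfy({ abs($0 - candidate.bpm) >= minSpacing }) {
            alternatives.append(candidate.bpm)
        }
    }
    return (primary: best.bpm, alternatives: alternatives)
}
```

The default range deliberately spans less than one octave. A perfectly periodic signal repeats just as well at twice the beat interval, so a wider range would produce ties between a tempo and its half.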

Combining both approaches

Integrating results from both Frequency and Time approaches:

- Combine BPM candidates from both approaches

- Add octave-corrected values (double and half of each candidate)

- Implement a voting system to weigh candidates

- Score candidates based on their presence in approximate values lists

- Select the primary BPM and top approximate values

Combining both approaches allows for a more robust and comprehensive analysis of the audio, ensuring both the ‘what’ and ‘when’ are captured effectively.
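The voting step could look something like the sketch below. The `TempoEstimate` type, the weights (3 for a primary value, 1 for an approximate one, half of that for octave variants) and the 60...200 BPM range are hypothetical choices made for the example, not the app's real values.

```swift
// Hypothetical container for the output of one approach.
struct TempoEstimate {
    let primary: Int
    let approximate: [Int]
}

/// Merges estimates from several approaches by weighted voting.
func combineEstimates(_ estimates: [TempoEstimate],
                      range: ClosedRange<Int> = 60...200) -> TempoEstimate? {
    var votes: [Int: Double] = [:]

    func vote(_ bpm: Int, weight: Double) {
        // The candidate itself, plus its octave-corrected variants at half weight.
        // Halving uses integer division, so odd values round down.
        for (value, w) in [(bpm, weight), (bpm * 2, weight / 2), (bpm / 2, weight / 2)]
        where range.contains(value) {
            votes[value, default: 0] += w
        }
    }

    for estimate in estimates {
        vote(estimate.primary, weight: 3)
        for bpm in estimate.approximate {
            vote(bpm, weight: 1)
        }
    }

    // Highest vote first; ties go to the lower BPM so the result is deterministic.
    let ranked = votes.sorted { lhs, rhs in
        lhs.value != rhs.value ? lhs.value > rhs.value : lhs.key < rhs.key
    }
    guard let winner = ranked.first else { return nil }
    return TempoEstimate(primary: winner.key,
                         approximate: ranked.dropFirst().prefix(3).map { $0.key })
}
```

For example, if one approach reports 64 with 128 among its approximate values and the other reports 128 directly, 128 collects votes from both sides and wins, while 64 stays in the list as the top alternative.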

For further research

BeatRoot - an open-source beat tracking application by Simon Dixon. There's an absolutely massive paper explaining BeatRoot: "Evaluation of the Audio Beat Tracking System BeatRoot". I've even tried reading this paper, but my mathematical comprehension stops around here:

$$ X(n, k) = \sum_{{m = -\frac{N}{2}}}^{{\frac{N}{2} - 1}} x(hn + m) \cdot w(m) \cdot e^{-\frac{2j\pi mk}{N}} $$

(It's some LaTeX formatted math within Markdown, should be readable in a markdown reader)
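Read as code, the formula is a windowed sum over one frame of audio. The sketch below evaluates it literally for a single frame `n` and frequency bin `k`. The function name `stftBin` is made up for the example, the Hann shape for `w(m)` is an assumption (the formula only names a generic window), and samples outside the signal are treated as zero.

```swift
import Foundation

/// Literal evaluation of X(n, k) for one frame and one frequency bin.
/// Parameter names mirror the formula: N is the window size, h the hop size.
func stftBin(_ x: [Double], n: Int, k: Int, N: Int, h: Int) -> (re: Double, im: Double) {
    guard N > 0 else { return (re: 0, im: 0) }
    var re = 0.0
    var im = 0.0
    for m in (-N / 2)..<(N / 2) {
        let index = h * n + m
        // Assumption: samples outside the signal count as zero.
        guard x.indices.contains(index) else { continue }
        // Assumption: w(m) is a Hann window centred on m = 0.
        let w = 0.5 + 0.5 * cos(2.0 * Double.pi * Double(m) / Double(N))
        // e^{-2jπmk/N} split into its cosine (real) and sine (imaginary) parts.
        let angle = -2.0 * Double.pi * Double(m) * Double(k) / Double(N)
        re += x[index] * w * cos(angle)
        im += x[index] * w * sin(angle)
    }
    return (re: re, im: im)
}
```

The magnitude `(re * re + im * im).squareRoot()` says how strongly frequency bin `k` is present around sample `h * n`. Real implementations compute all bins at once with an FFT instead of looping like this.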

BeatRoot has been converted to other languages, but I haven't checked them out yet: a Java version and a Python version.

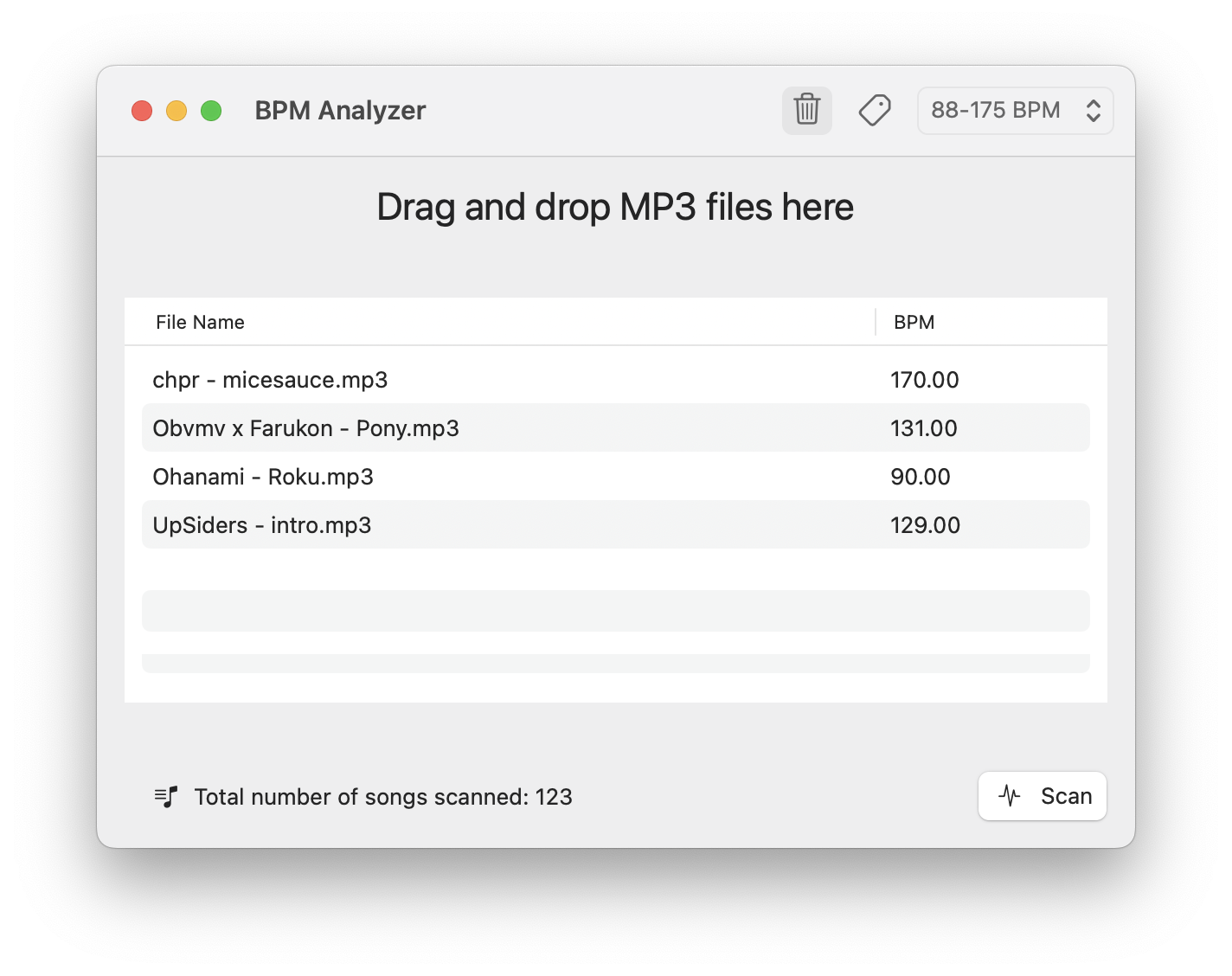

This is the final look of the MVP I've released to the Mac App Store - check it out.

I've also created a simple landing page to showcase the app.


This was a great project. I'm glad I've finally done it, because I needed this utility myself and at the moment it's the only BPM analyzer app for Mac, and it's free! I might add a "Tip me" option to see if it can make anything, but I'd need to figure out StoreKit and fix the banking details in my account.

But I also have a project idea I've been trying to make for over a year now, I think it's time to go deep into backend development...